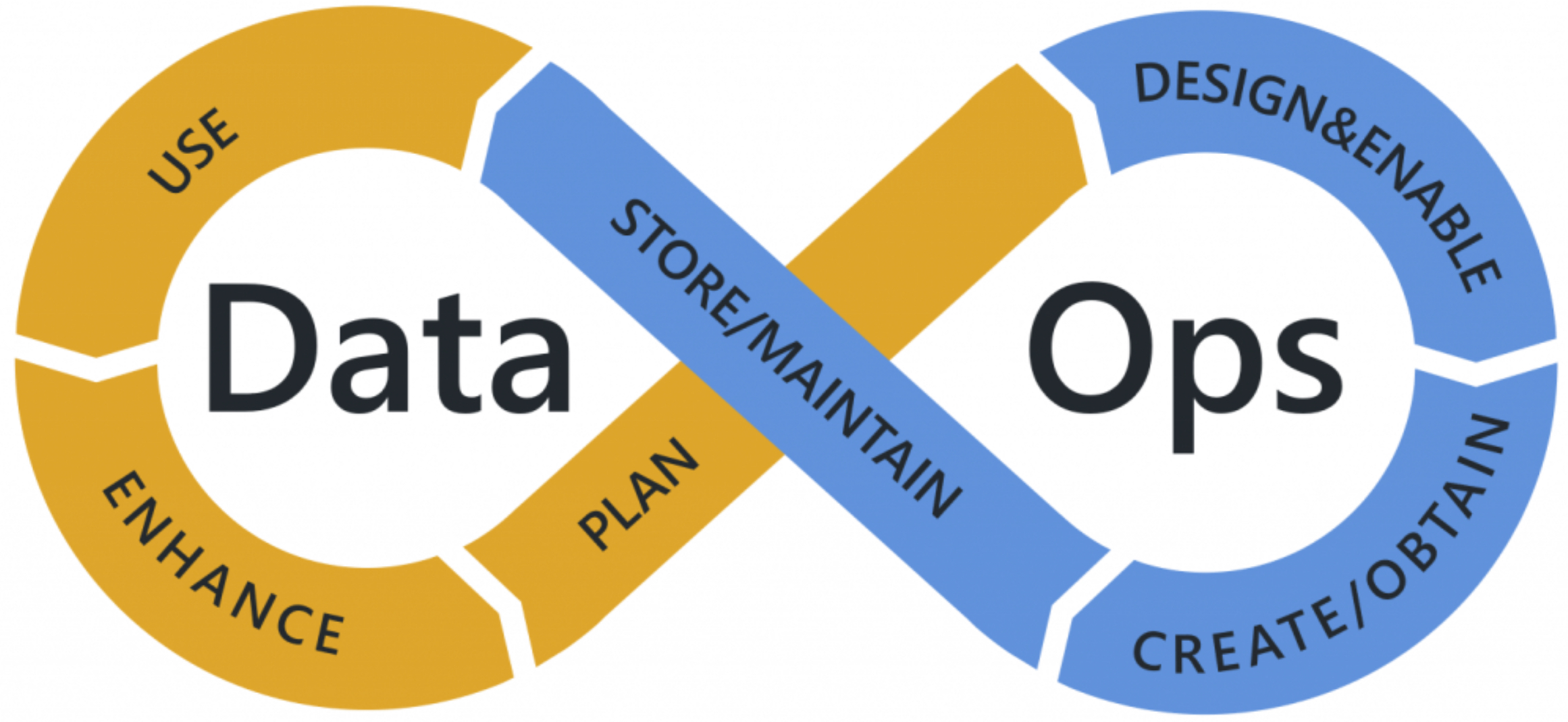

DataOps is an automated, process-oriented methodology used by analytics and data teams to improve the quality and reduce the cycle time of data analytics. Here’s a concise overview:

- Definition: DataOps combines DevOps, data engineering, and data analytics to streamline data management processes.

- Key goals:

  - Improve collaboration between data scientists, engineers, and other stakeholders

  - Automate data delivery pipelines

  - Ensure data quality and consistency

  - Reduce time from data generation to insight delivery

- Core principles:

  - Continuous integration and delivery of data

  - Automated testing and monitoring

  - Version control for data and analytics code

  - Emphasis on reusability and reproducibility

- Benefits:

  - Faster time-to-insight

  - Improved data quality

  - Enhanced collaboration across teams

  - Increased efficiency in data workflows

- Tools and practices:

  - Data orchestration tools

  - Version control systems

  - Automated testing frameworks

  - Containerization

DataOps aims to create a culture of continuous improvement in data-driven organizations, enabling them to respond more quickly to changing business needs and derive value from their data assets more effectively.
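
To make the "automated testing" principle from the overview more concrete, here is a minimal sketch of a data test that a CI job could run on every pipeline change. The `Order` record, the `ALLOWED_CURRENCIES` rule, and the function names are hypothetical and exist only for illustration; real pipelines would typically use a dedicated testing framework and rules taken from the business domain.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Order:
    # Hypothetical record type, used only for illustration.
    order_id: int
    amount: float
    currency: str


# Assumed business rule: only these currencies are valid.
ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}


def find_violations(orders: list[Order]) -> list[str]:
    """Return one human-readable message for every rule an order breaks."""
    violations = []
    seen_ids = set()
    for order in orders:
        # Uniqueness check: an order_id may appear only once per batch.
        if order.order_id in seen_ids:
            violations.append(f"duplicate order_id {order.order_id}")
        seen_ids.add(order.order_id)
        # Range check: amounts must not be negative.
        if order.amount < 0:
            violations.append(f"negative amount on order {order.order_id}")
        # Domain check: the currency must be one of the allowed codes.
        if order.currency not in ALLOWED_CURRENCIES:
            violations.append(
                f"unknown currency {order.currency!r} on order {order.order_id}"
            )
    return violations


def test_batch_is_clean() -> None:
    # A test runner (pytest, for example) could collect and run this function.
    batch = [Order(1, 19.99, "USD"), Order(2, 5.00, "EUR")]
    assert find_violations(batch) == []
```

Returning a list of messages rather than raising on the first problem lets one run report every violation in a batch, which tends to be more useful in monitoring output.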

How DataOps differs from traditional data management approaches:

- Agility vs. Waterfall:

  - Traditional: Often follows a waterfall model with distinct, sequential phases.

  - DataOps: Embraces agile methodologies, allowing for iterative development and frequent releases.

- Automation:

  - Traditional: Many processes are manual or semi-automated.

  - DataOps: Emphasizes extensive automation in data pipelines, testing, and deployments.

- Collaboration:

  - Traditional: Siloed teams with limited cross-functional interaction.

  - DataOps: Promotes close collaboration between data scientists, engineers, and business users.

- Speed of Delivery:

  - Traditional: Longer cycles from data collection to insight delivery.

  - DataOps: Aims for rapid, continuous delivery of insights.

- Quality Control:

  - Traditional: Quality checks often performed at the end of the process.

  - DataOps: Continuous testing and monitoring throughout the data lifecycle.

- Flexibility:

  - Traditional: Rigid structures that are slow to adapt to changes.

  - DataOps: Flexible architectures that can quickly respond to new requirements.

- Version Control:

  - Traditional: Limited version control, often focused on final outputs.

  - DataOps: Version control for data, code, and environments throughout the process.

- Error Handling:

  - Traditional: Reactive approach to errors, often discovered late in the process.

  - DataOps: Proactive error detection and handling through automated testing.

- Scalability:

  - Traditional: Often struggles with scaling to handle big data.

  - DataOps: Designed to scale efficiently, leveraging cloud and distributed computing.

- Governance:

  - Traditional: Centralized governance models.

  - DataOps: Balanced approach with centralized standards and decentralized execution.

- Skill Set:

  - Traditional: Specialized roles with deep expertise in specific areas.

  - DataOps: Encourages T-shaped professionals with broad knowledge and specific deep skills.

- Technology Stack:

  - Traditional: Often relies on monolithic, all-in-one solutions.

  - DataOps: Favors modular, interoperable tools that can be easily integrated and replaced.

This shift in approach allows organizations to be more responsive to data needs, maintain higher data quality, and extract value from their data assets more efficiently. However, transitioning to DataOps can require significant changes in culture, processes, and technology infrastructure.
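
One way to picture "version control for data" from the comparison above is a content fingerprint: if the fingerprint of a dataset changes, the data changed. The sketch below is an illustration only, not how any particular data versioning tool works. The function name `dataset_fingerprint` is hypothetical, and it assumes rows are small, JSON-serializable dictionaries that fit in memory.

```python
from __future__ import annotations

import hashlib
import json


def dataset_fingerprint(rows: list[dict]) -> str:
    """Return a SHA-256 hex digest that depends only on the rows' content."""
    # Serialize each row with sorted keys so key order does not matter,
    # then sort the serialized rows so row order does not matter either.
    canonical_rows = sorted(json.dumps(row, sort_keys=True) for row in rows)
    digest = hashlib.sha256()
    for line in canonical_rows:
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")  # separator between serialized rows
    return digest.hexdigest()
```

Storing such a fingerprint next to the commit hash of the pipeline code gives a simple record of which data version and which code version produced a given output.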

Let’s explore the challenges companies face when adopting DataOps:

- Cultural Resistance:

  - Many organizations struggle with changing their established data management culture.

  - Employees may resist new methodologies, fearing job obsolescence or increased workload.

  - Breaking down silos between teams (e.g., data scientists, engineers, business analysts) can be difficult.

- Skill Gap:

  - DataOps requires a blend of skills across data science, engineering, and DevOps.

  - Finding or training employees with this diverse skill set can be challenging.

  - Existing team members may need significant upskilling.

- Technology Integration:

  - Implementing new tools and platforms for automation, testing, and monitoring can be complex.

  - Integrating these with existing systems and legacy infrastructure often poses technical challenges.

  - Ensuring interoperability between different tools in the DataOps stack can be difficult.

- Data Governance and Security:

  - Balancing agility with proper data governance is a common struggle.

  - Ensuring data security and compliance (e.g., GDPR, CCPA) in a more dynamic environment can be challenging.

  - Maintaining data lineage and auditability in automated pipelines requires careful planning.

- Initial Investment:

  - Adopting DataOps often requires significant upfront investment in tools, training, and potentially new hires.

  - Convincing leadership of the long-term benefits versus short-term costs can be difficult.

- Complexity of Implementation:

  - Designing and implementing automated data pipelines that cover the entire data lifecycle is complex.

  - Setting up continuous integration/continuous delivery (CI/CD) for data projects can be more challenging than for software projects.

- Measuring Success:

  - Defining meaningful metrics for the success of DataOps initiatives, and tracking them consistently, can be difficult.

  - Demonstrating ROI, especially in the short term, can be challenging.

- Scalability Concerns:

  - As data volumes and variety grow, ensuring that DataOps practices scale accordingly can be difficult.

  - Maintaining performance and efficiency at scale requires ongoing optimization.

- Change Management:

  - Transitioning from traditional methodologies to DataOps requires careful change management.

  - Ensuring buy-in from all levels of the organization, from executives to individual contributors, can be challenging.

- Continuous Improvement:

  - DataOps is not a “set it and forget it” solution. It requires ongoing refinement and optimization.

  - Maintaining momentum and continuing to evolve practices over time can be difficult.

- Handling Data Quality:

  - Implementing automated data quality checks that catch all potential issues without creating false positives is challenging.

  - Balancing speed of delivery with thorough quality assurance can be tricky.

- Regulatory Compliance:

  - Ensuring that agile DataOps practices comply with industry-specific regulations can be complex, especially in highly regulated sectors like finance or healthcare.
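
On the "Measuring Success" challenge in the list above, two candidate metrics are cycle time (from data arrival to published insight) and the share of failed pipeline runs. The sketch below shows how they might be computed. The `PipelineRun` record and its field names are assumptions made for illustration; a real orchestrator would expose its own run metadata, and which metrics matter is a decision for each organization.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass(frozen=True)
class PipelineRun:
    # Hypothetical run record; field names are assumptions for this sketch.
    data_arrived_at: datetime
    insight_published_at: datetime
    succeeded: bool


def median_cycle_time(runs: list[PipelineRun]) -> timedelta:
    """Median time from data arrival to published insight (successful runs only)."""
    durations = [
        (run.insight_published_at - run.data_arrived_at).total_seconds()
        for run in runs
        if run.succeeded
    ]
    if not durations:
        raise ValueError("no successful runs to measure")
    return timedelta(seconds=median(durations))


def failure_rate(runs: list[PipelineRun]) -> float:
    """Fraction of runs that did not succeed, between 0.0 and 1.0."""
    if not runs:
        raise ValueError("no runs to measure")
    return sum(1 for run in runs if not run.succeeded) / len(runs)
```

The median is used rather than the mean so that a single unusually slow run does not dominate the figure. Tracking both numbers over time, rather than as one-off values, is what would show whether the practices are improving.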

Overcoming these challenges often requires a combination of strategic planning, investment in people and technology, and a commitment to cultural change. Organizations that successfully navigate these hurdles can reap significant benefits in terms of data agility, quality, and value creation.